Docker for Mocking? Yes!

Hardware simulation with Docker? The venerable deployment tool has a few tricks up its sleeve.

A couple of years ago I was asked by a client to "just finish a couple of features" for some desktop application they'd developed in-house. In consultancy circles we know this to be code for "can you make this well-intentioned but tech-debt laden project good enough to ship?".

The software in question is a Windows application developed in C# that provided the user interface for the bigger project: a bespoke unmanned watercraft, or ROV. In practice, the ROV is connected to the host computer via Ethernet over a long optical fibre umbilical cord. Naturally, spare ROVs for testing software, let alone a representative environment to test them in, were hard to come by. At the time the best available hardware was hundreds of kilometres away. But the operators there were willing to put it on a stand and connect it to the software. That way they could tell me over the phone how it was behaving while it sensed the world around it and flapped its various peripherals like a fish out of water.

But if I were forced to condense all the lessons I learned over 20+ years of product development engineering into a sentence, it would be this:

Minimise your feedback loop

So it was clear to me that while the client's focus was on the two or three features that needed implementing, my real challenge was being able to test them, not develop them. "Works on my machine" won't fly here. To get this mission-critical bit of software over the line, I needed to know not just whether the buttons on the UI ran my functions, or that the functions produced the correct combination of 1s and 0s, but that the system continued to provide situational awareness and machine control, even in the presence of dropped packets, resource starvation and errant signals.

Typically in embedded development we would address the challenges of testing with hardware-in-the-loop by "mocking" out the hardware - replacing the normal presence of hardware with software equivalents that fool the application into believing it's operating on real hardware. But in this case the hardware is essentially a LAN - a set of remote peripherals connected via various protocols over an Ethernet port, forming a Local Area Network. A good mock can still result in bad testability if it excludes (often unknown) characteristics of the hardware it is mocking. In this case, the presence of a LAN was a key characteristic of the system. So, I wondered, how do you mock a LAN?
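For comparison, the conventional approach in C usually looks something like this: the application only ever talks to a small function-pointer interface, so a test can hand it a mock that records what was sent and replays a canned reply. This is a minimal sketch; the `transport` struct, the one-byte `'D'` command and the two-byte reply are all hypothetical and have nothing to do with the ROV's real protocols.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical transport interface: application code calls only these
 * function pointers, so a test can substitute a mock for the real driver. */
typedef struct transport {
    void *ctx;
    size_t (*send)(void *ctx, const unsigned char *buf, size_t len);
    size_t (*recv)(void *ctx, unsigned char *buf, size_t cap);
} transport;

/* Mock state: captures the last request and holds a canned reply. */
typedef struct mock_ctx {
    unsigned char sent[64];
    size_t sent_len;
    const unsigned char *reply;
    size_t reply_len;
} mock_ctx;

size_t mock_send(void *ctx, const unsigned char *buf, size_t len) {
    mock_ctx *m = ctx;
    assert(len <= sizeof m->sent); /* a test double should fail loudly */
    memcpy(m->sent, buf, len);
    m->sent_len = len;
    return len;
}

size_t mock_recv(void *ctx, unsigned char *buf, size_t cap) {
    mock_ctx *m = ctx;
    size_t n = m->reply_len < cap ? m->reply_len : cap;
    memcpy(buf, m->reply, n);
    return n;
}

/* Application code under test: sends a one-byte 'D' (depth) request and
 * decodes a two-byte big-endian reply. Both are made up for illustration. */
int read_depth_cm(const transport *t) {
    const unsigned char cmd = 'D';
    unsigned char rx[2];
    if (t->send(t->ctx, &cmd, 1) != 1) return -1;
    if (t->recv(t->ctx, rx, sizeof rx) != sizeof rx) return -1;
    return (rx[0] << 8) | rx[1];
}
```

Note what the mock leaves out: there is no socket, no latency and no packet loss. That is exactly the kind of excluded characteristic that makes a perfectly good mock a poor test of a networked system.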

After doing some investigation, I somewhat begrudgingly returned to Docker. My resistance was mostly due to the fact that Docker's exceptional capabilities come largely from imagining a world where the hardware doesn't matter. I've spent some time coming to terms with its inherent reluctance to admit nuances of the underlying hardware. But in this case I wasn't so interested in the nuances of the hardware, only in the ability to emulate nodes on a network. Docker, as it happened, was a rather good fit.

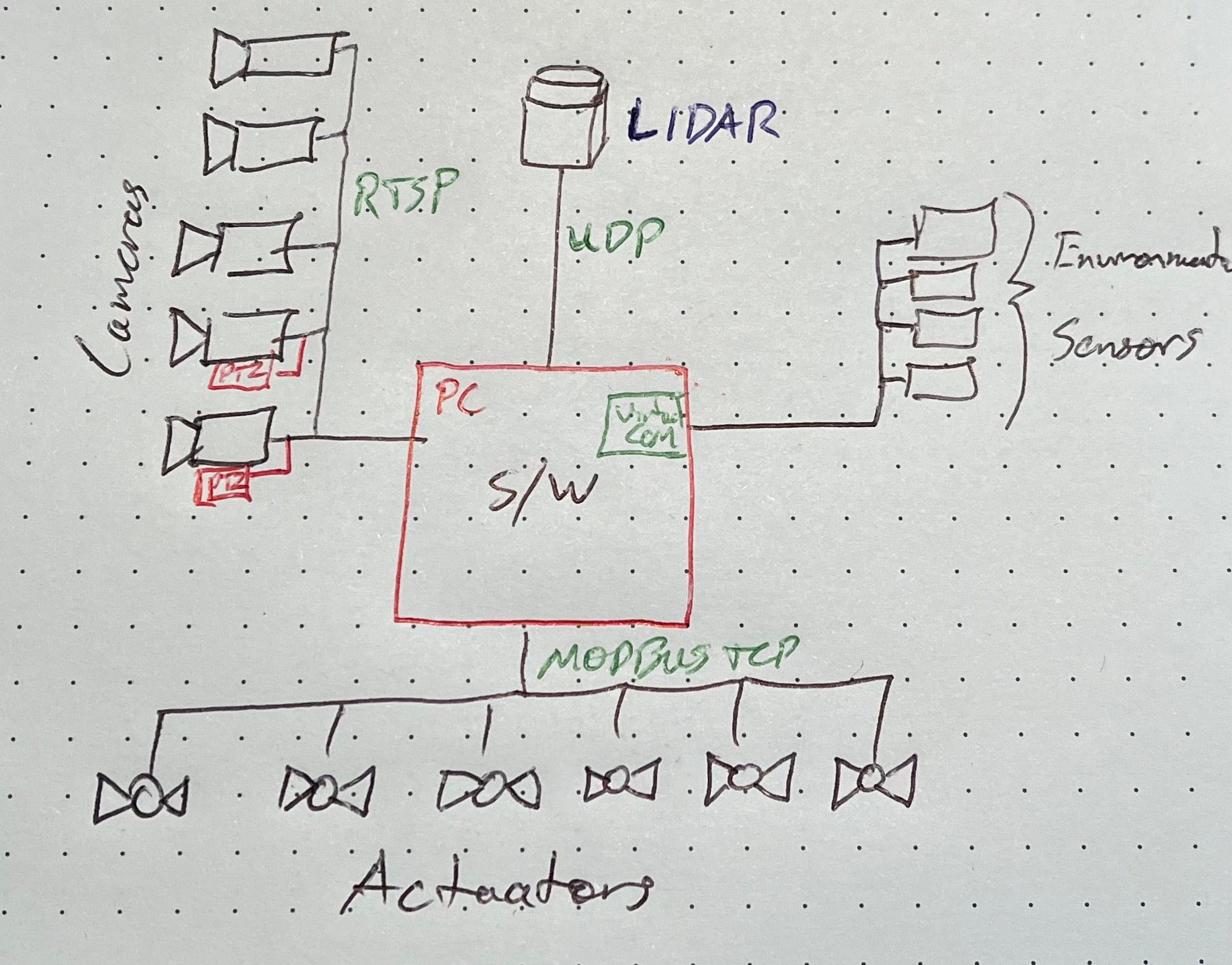

Ultimately the desktop software needed to communicate with the following nodes over a network:

1. A UDP stream of point data from a 360° scanning Lidar.

2. Serial data via virtual COM ports from four different multiple-signal sensors.

3. Five camera feeds.

4. The custom Pan-Tilt-Zoom (PTZ) control protocol on a subset of those cameras.

5. Six MODBUS servers that control and monitor various actuators.

For 1, I had the physical Lidar used in practice. Connected over a real LAN, and sitting on a shelf with a field of view I could manipulate, the real thing was better than a mock.

For 2, to create real (albeit virtual) COM ports I used com0com and wrote an emulator with a GUI that could run on the local machine to generate some configurable data profiles. The fact that the ports in practice are actually remote network nodes is unimportant for testing purposes, and the GUI has utility beyond software development.
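The emulator itself isn't shown here, but the heart of a "configurable data profile" can be quite small. A minimal sketch in C, assuming a simple line-based `TAG,value\r\n` text format; the real sensors' formats, the profile shapes and every name here are hypothetical:

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical profile shapes an emulator GUI might offer. */
typedef enum { PROFILE_CONSTANT, PROFILE_RAMP, PROFILE_SQUARE } profile_kind;

typedef struct {
    profile_kind kind;
    double low;      /* value at the bottom of the profile */
    double high;     /* value at the top of the profile */
    unsigned period; /* samples per cycle; must be at least 2 */
} profile;

/* Value of profile `p` at sample number `tick`. */
double profile_value(const profile *p, unsigned tick) {
    assert(p->period >= 2);
    unsigned phase = tick % p->period;
    switch (p->kind) {
    case PROFILE_RAMP: /* rise linearly from low to high, then wrap */
        return p->low + (p->high - p->low) * phase / (p->period - 1);
    case PROFILE_SQUARE: /* low for the first half-period, high for the rest */
        return phase < p->period / 2 ? p->low : p->high;
    case PROFILE_CONSTANT:
    default:
        return p->low;
    }
}

/* Format one reading as "TAG,value\r\n" (hypothetical sentence format).
 * Returns the number of characters written, or -1 if the tag contains the
 * field separator or the line does not fit in `cap` bytes. */
int format_reading(char *buf, size_t cap, const char *tag, double value) {
    if (strchr(tag, ',') != NULL) return -1;
    int n = snprintf(buf, cap, "%s,%.2f\r\n", tag, value);
    return (n < 0 || (size_t)n >= cap) ? -1 : n;
}
```

Each formatted line would then be written to the emulator's end of a com0com port pair on a timer, with the desktop software reading from the other end as if it were the real sensor.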

For the rest I needed some ability to scale and to automate software control, to provide that sweet, sweet feedback during development. Running those mocks off-target gave a more accurate picture of resource utilisation, and being able to bring them up and down independently was excellent for studying the all-important make/break events.

A single Docker Compose script proved wonderfully sufficient. It consists of 15 services:

- A single RTSP server that the camera feed services stream through.

- The five camera stream services that create streams using ffmpeg and file footage.

- A PTZ dependency service that builds the PTZ application.

- The PTZ server.

- A MODBUS dependency service that prepares common conditions for each of the MODBUS servers.

- The six MODBUS servers.
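Having the MODBUS servers on fixed host ports also makes them easy to poke from a test harness, independently of the desktop software. The following sketch builds a standard MODBUS TCP Read Holding Registers request (function code 0x03: a 7-byte MBAP header followed by a 5-byte PDU) and decodes the reply. Unit ID 1 and the register addresses used in the test are assumptions, not values from the Pretendypus configuration files, and the socket handling is left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Build a MODBUS TCP Read Holding Registers request (function 0x03):
 * a 7-byte MBAP header plus a 5-byte PDU, all fields big-endian.
 * Returns the frame length (12), or 0 if `cap` is too small or
 * `quantity` is outside the 1..125 range the function allows. */
size_t modbus_read_holding_req(uint8_t *buf, size_t cap, uint16_t tid,
                               uint8_t unit, uint16_t addr, uint16_t quantity) {
    assert(buf != NULL);
    if (cap < 12 || quantity < 1 || quantity > 125) return 0;
    buf[0] = (uint8_t)(tid >> 8);       buf[1] = (uint8_t)(tid & 0xFF);
    buf[2] = 0;                         buf[3] = 0; /* protocol id 0 = MODBUS */
    buf[4] = 0;                         buf[5] = 6; /* bytes that follow */
    buf[6] = unit;
    buf[7] = 0x03;                                  /* function code */
    buf[8] = (uint8_t)(addr >> 8);      buf[9] = (uint8_t)(addr & 0xFF);
    buf[10] = (uint8_t)(quantity >> 8); buf[11] = (uint8_t)(quantity & 0xFF);
    return 12;
}

/* Decode the reply into `regs`. Returns the register count, or -1 for a
 * short or mismatched frame, or an exception reply (function 0x83). */
int modbus_parse_holding_resp(const uint8_t *buf, size_t len, uint16_t tid,
                              uint16_t *regs, size_t max_regs) {
    assert(buf != NULL && regs != NULL);
    if (len < 9) return -1;
    if (((buf[0] << 8) | buf[1]) != tid) return -1;
    if (buf[7] != 0x03) return -1;
    size_t count = buf[8]; /* byte count: two bytes per register */
    if (count % 2 != 0 || len < 9 + count || count / 2 > max_regs) return -1;
    for (size_t i = 0; i < count / 2; i++)
        regs[i] = (uint16_t)((buf[9 + 2 * i] << 8) | buf[10 + 2 * i]);
    return (int)(count / 2);
}
```

Writing the 12-byte frame to a TCP connection on, say, port 5021 and passing whatever comes back to the parser is enough for a smoke test. A reply with function code 0x83 signals a MODBUS exception rather than data.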

Apart from the compose script itself, there is very little custom development here. The RTSP server, the ffmpeg client and the MODBUS server are all provided by images found on Docker Hub. There is some configuration required, but only the PTZ server is custom. It's a very basic console C application that communicates like the real device would, in fewer than 100 lines of code. Developing it was an important exercise in understanding the protocol anyway.
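The networking for the PTZ mock comes for free. Assuming the `dellabetta/tcpserver` image wraps ucspi-tcp's `tcpserver` (the `-v 0 32000 /app/ptz` arguments fit its `tcpserver [options] host port program` form), each client connection is handed to a fresh `ptz` process with the socket on stdin and stdout, so the mock only has to read commands and write replies. A minimal sketch of that shape follows; the ASCII command set and limits are hypothetical, since the real PTZ protocol is custom and isn't described here.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Simulated state of the camera head. */
typedef struct { int pan; int tilt; int zoom; } ptz_state;

/* Handle one command line and write the reply into `out`.
 * Hypothetical ASCII protocol (the real device's protocol differs):
 *   "PAN <deg>" (-180..180)   "TILT <deg>" (-90..90)
 *   "ZOOM <level>" (1..30)    "STATUS"
 * Valid commands reply "OK <pan> <tilt> <zoom>"; anything else "ERR". */
void ptz_handle(ptz_state *s, const char *line, char *out, size_t cap) {
    int v;
    assert(s != NULL && line != NULL && out != NULL && cap > 0);
    if (sscanf(line, "PAN %d", &v) == 1 && v >= -180 && v <= 180) {
        s->pan = v;
    } else if (sscanf(line, "TILT %d", &v) == 1 && v >= -90 && v <= 90) {
        s->tilt = v;
    } else if (sscanf(line, "ZOOM %d", &v) == 1 && v >= 1 && v <= 30) {
        s->zoom = v;
    } else if (strncmp(line, "STATUS", 6) != 0) {
        snprintf(out, cap, "ERR\r\n");
        return;
    }
    /* Every accepted command reports the full state, like a status poll. */
    snprintf(out, cap, "OK %d %d %d\r\n", s->pan, s->tilt, s->zoom);
}
```

A `main` that loops over `fgets(line, sizeof line, stdin)`, calls `ptz_handle` and writes each reply with `fputs` followed by `fflush(stdout)` completes the program. The explicit flush matters because stdout is fully buffered when it is a socket rather than a terminal.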

The codename for the ROV was "Platypus". The mock, therefore, was destined to be "Pretendypus". The project consists entirely of the compose script itself, plus the file footage, the MODBUS configuration files, a third-party Node application for merging them and the custom PTZ application. The compose script is here, warts and all:

```yaml
# syntax=docker/dockerfile:1.4.3
#
# Pretendypus - the platypus emulator.
#
# Date: Dec 2022
# Author: Heath Raftery <heath@empirical.ee>
#
# Features:
# - Exposes 5 RTSP feeds on port 8554 at endpoints cam1 through cam5
# - Exposes 1 TCP server on port 32000
# - Exposes 6 MODBUS TCP servers on ports 5021 through 5026
#
# Usage:
# 1. On the development machine or a computer on the same
#    network, run this compose script with `docker compose up`.
# 2. In the [Application] project, set the IP address and port of the
#    feeds to the IP address of the machine running Pretendypus
#    and the ports/endpoints listed in the "Features" section above.
# 3. Run the [Application] software to connect to Pretendypus.
#

volumes:
  configs:

services:
  rtsp-server:
    ports:
      - "1935:1935"
      - "8554:8554"
      - "8888:8888"
    environment:
      - RTSP_PROTOCOLS=tcp
    image: aler9/rtsp-simple-server:v0.20.2

  cam1: &cam
    # Note: potential optimisation here using hardware acceleration, but hard to match with the host OS.
    image: jrottenberg/ffmpeg:5.1.2-scratch313
    links:
      - "rtsp-server:rtsp-server"
    depends_on:
      - rtsp-server
    volumes:
      - ./videos:/v:ro
    command: -re -stream_loop -1 -i /v/cam1.mp4 -c copy -f rtsp rtsp://rtsp-server:8554/cam1

  cam2:
    <<: *cam
    command: -re -stream_loop -1 -i /v/cam2.mp4 -c copy -f rtsp rtsp://rtsp-server:8554/cam2

  cam3:
    <<: *cam
    command: -re -stream_loop -1 -i /v/cam3.mp4 -c copy -f rtsp rtsp://rtsp-server:8554/cam3

  cam4:
    <<: *cam
    command: -re -stream_loop -1 -i /v/cam4.mp4 -c copy -f rtsp rtsp://rtsp-server:8554/cam4

  cam5:
    <<: *cam
    command: -re -stream_loop -1 -i /v/cam5.mp4 -c copy -f rtsp rtsp://rtsp-server:8554/cam5

  ptz-prep:
    #note: must be alpine gcc otherwise "not found" when the compiled binary is executed.
    #Picked n0madic for the fixed tags, although it's broken after 9.2.0! becivells has
    #tags, but no versions. Turns out 2022-12-16 is Alpine 3.17.0 and gcc 12.2.1 which
    #is very fresh and fine, but has same "not found" on execution issue! frolvlad is
    #weirdly popular and also works, but is 3 days old with no tags so may break at any
    #point. Ichaia is obsolete. Oh what a mess Docker Hub is.
    image: n0madic/alpine-gcc:9.2.0
    working_dir: /app
    volumes:
      - ./scripts/ptz.c:/app/ptz.c:ro
      - ./build/:/app/build/
    command: gcc -o build/ptz ptz.c

  ptz:
    image: dellabetta/tcpserver
    depends_on:
      ptz-prep:
        condition: service_completed_successfully
    volumes:
      # - ./build/ptz:/app/ptz #fails on Windows with "no such file or directory".
      - ./build:/app #absolutely bizarre, couldn't figure it out, but this works instead so go with it.
    ports:
      - "32000:32000"
    command: -v 0 32000 /app/ptz

  modbus-prep:
    image: node:19.2.0-alpine
    working_dir: /app
    volumes:
      - ./modbus:/m_src:ro
      - configs:/m
      - ./scripts/json.js:/app/json.js:ro
    # Merge each server config with the defaults previously pulled from the image.
    # Note logLevel changed from DEBUG to INFO for sanity. Alas, we lose client activity.
    command: /bin/sh -c "
      for i in /m_src/server*.json; do
        bi=`basename $$i`;
        echo Preparing $$bi;
        (cat /m_src/defaults.json; echo; cat $$i) | node json.js --deep-merge > /m/$$bi;
      done"

  modbus1: &modbus
    image: oitc/modbus-server:1.1.2
    restart: always
    depends_on:
      modbus-prep:
        condition: service_completed_successfully
    volumes:
      - configs:/m:ro
    ports:
      - "5021:5020"
    command: -f /m/server1.json

  modbus2:
    <<: *modbus
    ports:
      - "5022:5020"
    command: -f /m/server2.json

  modbus3:
    <<: *modbus
    ports:
      - "5023:5020"
    command: -f /m/server3.json

  modbus4:
    <<: *modbus
    ports:
      - "5024:5020"
    command: -f /m/server4.json

  modbus5:
    <<: *modbus
    ports:
      - "5025:5020"
    command: -f /m/server5.json

  modbus6:
    <<: *modbus
    ports:
      - "5026:5020"
    command: -f /m/server6.json
```

And the result looks like this:

The multiple video feeds really are streaming over the network, allowing assessment of network variability, responsiveness and resolution tradeoffs.

Here it is communicating with the PTZ mock. The mock has detailed logging, providing off-target monitoring of software behaviour.

And finally, here's the virtual COM port simulator with the associated view of the desktop software, viewed alongside the Docker control panel running on another computer, which allows services to be interrupted and restarted at will.

Apart from the usual frustrations of getting predictable behaviour out of Docker, it proved excellent in this application. It has certainly opened my eyes to the possibilities of Docker as a testing tool, not just a deployment tool.